Introduction

- An operating system is a program that acts as a interface between a user and computer.

- Its provides an environment for users in which programs can be executed in efficient and convenient manner.

Components of Computer

- The hardware

- Operating System

- Application Program

- The User

Operating System can be viewed as—

- Control Programs: manages the execution of user program to prevent errors and improper use of computer.

- Kernal: A program that running all times.

Typical Services provide by OS—

- Program Creation

- Program Execution

- Controlled Access to Files

- Access to I/O devices

- Access to System

- Error detection and Response

- Accounting

Function of Operating System—

- Process Management

- File management

- Memory Management

- Resource and Device Management

- Protection and Security of Data

Types of Operating System–

1.Batch System:

- Jobs with similar needs are batched together to speed up the processing.

- Resident operating system allowed system to automatic job sequencing.

- During executing of job, lack of interaction between user and the job.

- Low level of CPU utilization.

2.Multiprogrammed Systems:

- At a time several jobs are put in memory.

- OS picks up one of the job and execute in memory.

3.Time Sharing System:

- also called as Multitasking.

- It is a logical extension of Multiprogramming.

- Users can interact with each program while it is running whenever switching occurs.

- It exploits CPU scheduling and Multiprogramming to provide a small portion of time shared system to users.

4. Parellel System:

- also called Multiprocessors.

- More than one processor which are in closed communication, shares the computer bus, the clock, memory(when required), and peripheral devices.

- Fault tolerant system

- Symmetric Multiprocessing Model– each processor runs an identical copy of OS and these copies can communicate with each others when required.

- Asymmetric Multiprocessing Model — a specific task is assigned to each processor and a Master processor controls the system.

5.Distributed System:

- Each processor contains its own local memory.

- The processor does not share memory or clock.

- Its a loosely coupled system.

6. Real Time System:

- Real Time System controls the scientific system(experiments).

- Used in medical imaging system, controlling industrial system and display etc.

- Limited time constraints.

- Hardware R.T.S– Guarantees to complete critical task on time;

- Software R.T.S– Less Restrictive type.

System Call:

- System call is occured whenever a application program needs a service of Operating System.

- It provides interface between Process and Operating System.

- It is accessed by a program through a high level API such as Win32 API(window), POSIX API(Unix, Linux Mac OS ), Java API (Java Virtual Machine) etc.

Types of System Call:

- Process Control

- File Management

- Information Maintenance

- Device Management

- Communication

System Program:

- provides a environment for developing and execution of program.

System Program Category:

- Programming language support

- Loading and Execution of Program

- File Management

- File modification

- Status Information

- Communication

SYSGEN: This program get the information of specific configuration of hardware.

BOOTING: Starting process of computer by loading the KERNAL.

BOOTSTRAPING PROGRAM: Codes are stored in ROM and has a ability to locate KERNAL, load it in Memory and start execution.

Processes:

- program in execution called process

- Process contains Stack, Temporary Data, and a data section containing global variables.

- Process is an active entity whereas program is passive.

Five state Mode:

Process Control Block:

- also called task control block

- in OS process is represented by PCB.

Process Scheduling:

To maximize the utilization of CPU Process Scheduling is used

- Job Queue: it is the set of all the processes in the system

- Ready Queue: it is the set of all the processes in the main memory, that is ready and waiting to execute

- Device Queue: it is the set of all the processes that is waiting for particular devices.

Schedulers:

The selection of processes from scheduling queue is done by schedulers.

Types of schedulers

- Long Term Schedulers:

- New to Ready

- It is also called as Job Schedulers

- It selects processes from the job pool and loads them into memory for execution.

- It controls the degree of multi programming.

Process can be—

- Input/Output bound process: It spends more of its time in doing Input/Output than spending time in computation.

- CPU bound: It generates input/ output request frequently using more of times in computation.

2. Short Term Schedulers:

- CPU schedulers

- It selects the process that is ready to execute

- It must select a new process for the CPU frequently

- If all the processes are I/O then ready queue will be empty.

3. Medium Term Schedulers:

- It removes the processes from memory that leads to reduce the degree of multi programming.

- After sometimes process can be continued where it is left off is called Swapping

Context Switch:

Saving the states of old process during the switching of CPU to another process and loading the saved state for new process is called Context Switching.

Fork System Call: There are 2n -1 child process for given n.

Threads:

- It is the light weight process (LWP) , is a basic unit of CPU utilization.

- It contains thread id, a program counter, a register set and a stack.

- It shares code section, data section, and other OS resources with other threads belonging to the same process.

Process vs Threads:

Similarity:

- Like processes, thread shares CPU and only one thread active at a one time.

- Like processes, threads within process execute concurrently.

- Like processes, thread can create children

- If one thread is block other thread can run.

Difference:

- Unlike process, threads are not independent of one another

- Unlike process, all threads can access every address

- Unlike process, threads are design to assist one other but process may or may not.

Suspending a process leads to suspending all the threads.

Spawn is a state with a change in the thread state which is

- block

- unblock

- finish

Types of threads:

- User level Thread:

- it is implemented in user level libraries rather than system call

- does not need to call OS during thread switching

- Kernal know nothing about user level threads

- User level thread can be implemented in an OS that does not support thread.

- User level thread does not require to modify OS

- it can be represented by Program Counter, register, stack, and small control block

- Fast and efficient

Disadvantage:

- Lack of co-ordination between threads and OS Kernal

- process get one time slice as a whole whether it has one thread or more threads

- Process is blocked if one thread causes fault

2. Kernal level thread:

- Kernal know about and manages threads

- No run time system is needed

- Kernal has thread table which that keeps track of all threads

- OS kernal provides system call to create and manage threads

- Full knowledge about threads

- Hardwired Support

- Independent, ineficient, context switching slower

- Execution scheduling difficult

- Context switching and sharing is the advantage of Kernal level thread

- Security and blocking is the disadvantage of Kernal level thread

CPU Scheduling:

Turn Around Time = Completion time – Arrival Time

Waiting Time = Turn Around Time – Burst Time

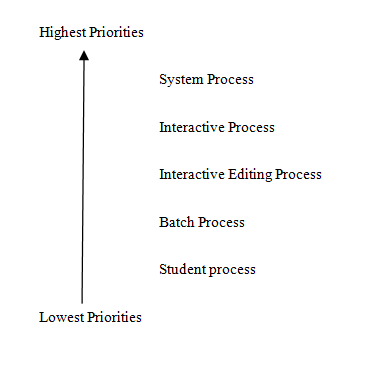

- Multilevel Queue Scheduling: This scheduling is created for a situation where processes are classified into different groups.

- It partition the ready queue into several separate queue

- Each queue has its own scheduling algorithm

- Foreground—–Round Robin (Time slice 80%)

- Background—–First come First serve (time slice 20%)

- In this strategy, processes at the bottom level in ready queue suffers from starvation.

2. Multilevel Feedback Queue Scheduling (MFQS):

- A process can move between various queue (aging is implemented)

- Process is separated with different CPU burst characteristics.

- If a process takes too much CPU time , it is moved to lower level priority queue.

- I/ O bound and Interactive process reside in higher priority queue.

- A process that wait too long in a lower priority queue, moves to higher priority queue.

- No starvation

3. Round Robin Scheduling:

- Round Robin Scheduling is preemptive

- If there are n Processes in the ready queue and time quantum is q then each process will get 1/n of CPU times where at most time is q.

- each process must wait no longer than (n-1)*q time unit.

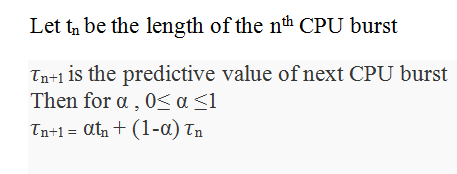

4. Shortest Job First Scheduling:

The next CPU burst is calculated as the exponential average of the measured length of previous CPU burst

Process Synchronization:

Process:

- Co-operative process

- Independent process

Need for Synchronization: In multi programming system, some process perform read operation and some process write operation simultaneously that leads to data inconsistency. Due to this process synchronization needed.

Problem of Synchronization:

- Inconsistency

- Printer- spooler Doemon problem

- Loss of data

- Deadlock

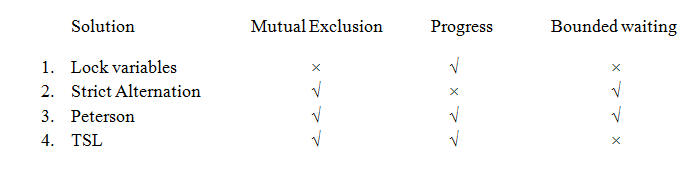

Mutual Exclusion:

If a process Pi executing in its critical section, then no other process can be executing in their critical section.

Progress:

If more than one processes want to go inside the critical section, then the decision of which process will go inside the critical section will be decided by the process which wants to use critical section.

Decision can not be delayed upto infinite time.

Ex. Process Pi will not decide whether process Pj will go inside critical section or not.

Bounded Waiting:

No process should have to wait forever to enter into critical section. There should be a bound of giving chance to other process to enter into critical section.

Solutions:

- Software Type

- Lock variables

- Strict Alternation (Decker Algorithm)

- Peterson Algorithm

2. Hardware Type

- Test ans Set instruction set

3. Operating system type

- Counting Semaphore

- Binary Semaphore

4. Compiler and Programming language support type

- Monitor

Semaphore:

- Counting Semaphore (-∞ to ∞)

- Binary Semaphore (0 or 1)

Monitor:

It is a collection of variables, conditional variables, and procedures which is grouped together for special kind of module and packages. Only one process can be get inside the Monitor.

Deadlock Avoidance :

Banker’s Algorithm can be used to avoid Deadlock.

Formula:

Current Available = Total- Current Allocation

Remaining Need = Max. Need – Current Allocation

Memory Management

Functionality is to allocating and dellocating the memory to the process

Goal is to efficient utilization of memory by minimizing the internal and external fragmentation.

Addressing in Memory

- Word addressable

- Byte addressable

Loading or Linking

- Static

- Dynamic

- Loading is the process of loading the program from secondary memory to main memory in order to start the execution of process

- Static Loading : Loading the entire program in memory before the starting of execution. Efficient utilization of memory whether or not to load entire program into memory. Program execution is faster.

- Linking: All the modules or functions of the program

- Dynamic Loading : Loading a program into memory at run time.Efficient utilization of memory. Program execution is slower. Dynamic loading is used according dynamic linking is used.

Dynamic Loading and Linking is used as default.

Address Binding

The association of program instruction and data to the actual physical memory location.

- Compile time address binding: If the compiler takes the responsibility of association of program instruction and data binding. Compiler time address binding is done before loading the program into memory.Compiler needs to interact with OS memory manager to perform compile time address binding.

- Load time address binding: Load time address binding is done OS memory manger (Loader). This address binding is done after loading the program into memory.

- Dynamic/ Execution time address binding: This type of address binding is done at time of program execution. The address binding will be postponed even after loading the program into memory.

Dynamic is the default type of address binding.

Memory Management Technique

- Contiguous

- Fixed Partition Scheme

- Variable partition Scheme

2. Non- Contiguous

- Paging

- Multilevel Paging

- Inverted Paging

- Segmentation

- Segmented Paging

Virtual Memory

Virtual memory is the technique of execution of process whose size is greater than main memory.

- valid-invalid bit (1= in memory, 0= not in memory)

- during address translator if valid- invalid bit in page table entry is 0 => page fault

- Effective Access time = (1-P)m + P × page fault service time (ms)

m= memory access time, P= page fault rate (probability of page fault), (1-P)= page hit ratio

Page replacement Algorithm

- FIFO: Replace the page that has been brought first into memory

- Optimal: Replace the page that will not be used for longest period of time

- Least recently used (LRU): Replace the page which has least recently used.

Frame Allocation

Depending upon the size of process, System allocate number of frame to process.

Let p1, p2, p3…..pn (n number of process)

S1, S2 , S3 …….Sn (size of process respectively)

Number of frame allocated to process pi =

[size of process (Si)/ Sum of Sizes(S1+S2+…Sn)] × Number of frame

Active pages

Active page is used by CPU frequently. If active page is inside the main memory then less page fault occurs.

Local allocation

Each process select from only its own set of allocated frames.

Global Allocation

Process can take a frame from another. it select a replacement frame from the set of all frames.

Thrashing

If one process replace the active page of other process then page fault occurs and CPU keep busy in handling the page fault, this is called thrashing.

File System Implementation

File : A file is a named named collection of related information about all files.

Directory Structure of File: A collection of nodes containing information about all files.

- Single level directory

- Two level directory

- Tree structure

- Acyclic graph direct

- General graph directory

Access

- Sequential access

- Random Access

Solution of name collision is two level directories.

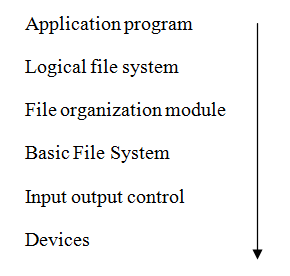

File System Structure

- Layered file system:

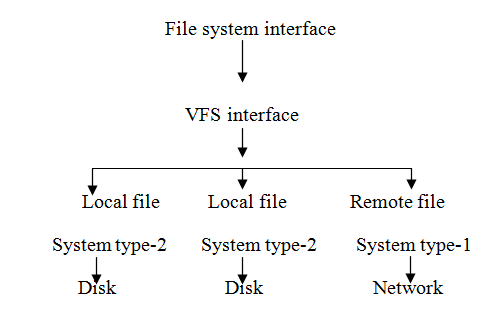

2. Virtual File System: It provides the object oriented way of implementation of file system. It allow the same system call interface (API) for different type of system.

API is to the VFS interface rather than any specific file system

Allocation Method:

How disk blocks are allocated for files

- Contiguous allocation: each files occupies a set of contiguous blocks on the disk

- Non- Contiguous or Linked allocation: each file is a linked list of disk block, blocks may scattered anywhere in disk, No random access, no waste of space.

- Indexed allocation: brings all pointers together into the index block.Random access, mapping from logical to physical, dynamic access without external fragmentation.

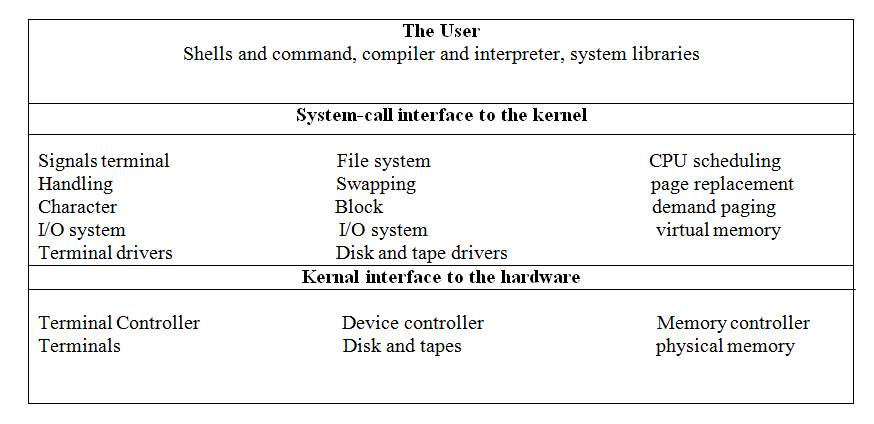

UNIX

Limited by hardware functionality consist of two separable part

- System Program

- Kernal: consist of everything below the system call interface and above the physical hardware.